In this article I will present how to use SSE instructions in C++ (or C).

My goal is not to show how to write the fastest possible program using SSE but rather to introduce to its usage.

What is SSE ?

SSE stands for Streaming SIMD Extensions. It is a set of CPU instructions dedicated to applications like signal processing, scientific computation or 3D graphics.

SIMD is an acronym itself: Single Instruction, Multiple Data. A CPU instruction is said to be SIMD when the same operation is applied on multiple data at the same time.

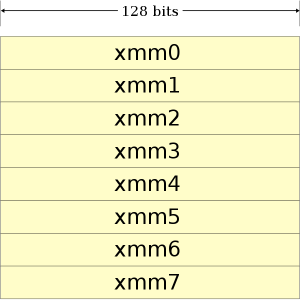

SSE was first introduced in the Pentium III in 1999. Over the time, this instruction set was improved by adding more sophisticated operations. Eight 128-bits registers were added to the CPU: xmm0 through xmm7.

Initially those registers could only be used for single-precision computations (i.e. for the type float). But since SSE2, those registers can be used for any primitive data type.

Given a standard 32-bit machine we can therefore store and process in parallel:

- 2 double

- 2 long

- 4 float

- 4 int

- 8 short

- 16 char

Note that integer types can be signed or unsigned, but you will have to use (sometimes) different instructions.

For instance if you have to compute the sum of two int arrays, using SSE you can compute 4 additions in a single assembly instruction.

Simple program

It is not easy getting started with SSE, fortunately the MSDN documentation is complete and well-written.

If you take a look at the list of arithmetic operations you can notice there is always a corresponding assembly instruction. Some operations on the other side (such as set operations) are composite operations.

Using SSE in C++ is indeed a very low-level construct: we will manipulate directly the 128-bits registers through variables of type __m128 for float, __m128d for double and __m128i for int, short, char.

But the good news is that you don't need to declare arrays of type __m128 in order to use SSE: for instance if you want to calculate the square root of a large array of float, you can simply cast your pointer to the type __m128* and then use SSE operations.

There is a tweak however, most SSE operations requires the data to be 16-bytes aligned, here we will use another variable attributes of gcc.

We use the align attribute:

aligned (alignment)

This attribute specifies a minimum alignment for the variable or structure field, measured in bytes.

Here is a simple code on how to use SSE in order to compute the square root of 4 float in a single operation using the _mm_sqrt_ps function.

float a[] __attribute__ ((aligned (16))) = { 41982., 81.5091, 3.14, 42.666 }; __m128* ptr = (__m128*)a; __m128 t = _mm_sqrt_ps(*ptr); |

The corresponding assembly instruction is SQRTPS, if we generate the assembly code of our program (using gcc -S) we can notice that this instruction is indeed used on a SSE register:

sqrtps %xmm0, %xmm0 |

Don't forget the include !

#include <emmintrin.h> |

First benchmark

In the previous program we computed the square root of 4 float simultaneously but the result was not stored in the array. For this purpose we use _mm_store_ps.

In the following program we compute the square root of a very large array on float. We use the timer class presented here in order to measure the execution time of the SSE version against the standard version.

void normal(float* a, int N) { for (int i = 0; i < N; ++i) a[i] = sqrt(a[i]); } void sse(float* a, int N) { // We assume N % 4 == 0. int nb_iters = N / 4; __m128* ptr = (__m128*)a; for (int i = 0; i < nb_iters; ++i, ++ptr, a += 4) _mm_store_ps(a, _mm_sqrt_ps(*ptr)); } int main(int argc, char** argv) { if (argc != 2) return 1; int N = atoi(argv[1]); float* a; posix_memalign((void**)&a, 16, N * sizeof(float)); for (int i = 0; i < N; ++i) a[i] = 3141592.65358; { TIMER("normal"); normal(a, N); } for (int i = 0; i < N; ++i) a[i] = 3141592.65358; { TIMER("SSE"); sse(a, N); } } |

In the SSE function we use two pointers containing the same address but of different types, it is of course not necessary but avoid casting. It is also interesting to notice that we must increment the __m128 pointer by only 1 (128 bits) whereas we must increment the float pointer by 4 (32 bits).

Another interesting function here is the posix_memalign function instead of the align attribute. The first function allocates aligned data on the heap whereas the gcc attribute allocates on the stack.

The benchmark was compiled with llvm-g++ 4.2 (flags: -O3 -msse2) and ran on a Intel Core2 Duo P7350 (2GHz):

$ ./sqrt 64000000

normal: 392ms

SSE: 145ms

Pretty fast indeed !

Second Benchmark

And now how to add two arrays of char together:

void sse(char* a, const char* b, int N) { int nb_iters = N / 16; __m128i* l = (__m128i*)a; __m128i* r = (__m128i*)b; for (int i = 0; i < nb_iters; ++i, ++l, ++r) _mm_store_si128(l, _mm_add_epi8(*l, *r)); } |

And the benchmark with full optimizations turned on:

$ ./add 64000000

normal: 98ms

SSE: 42ms

Performance considerations

You may ask why we don't have a 4-fold speedup when we compute the square root of 4 float in a single instruction and why we only have a 2-fold speedup when we add 16 char at the same time.

The answer is that your compiler is smart and performs a lot of optimizations, especially at the O3 level. In fact, if you take a look at the generated assembly code for the "normal" version of the sort and add functions you will probably notice that your compiler used SSE instructions behind your back. The compiler detected a loop pattern suitable for SSE and therefore decided to use dedicated instructions, however using directly the SSE instructions the generated code was even faster.

Depending on your compiler version, for such simple loops it is possible that you will notice no difference in execution time, but as mentioned, my goal was not pure performances.

Comments are closed.